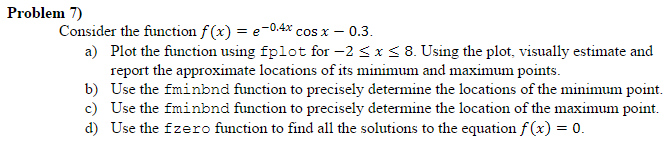

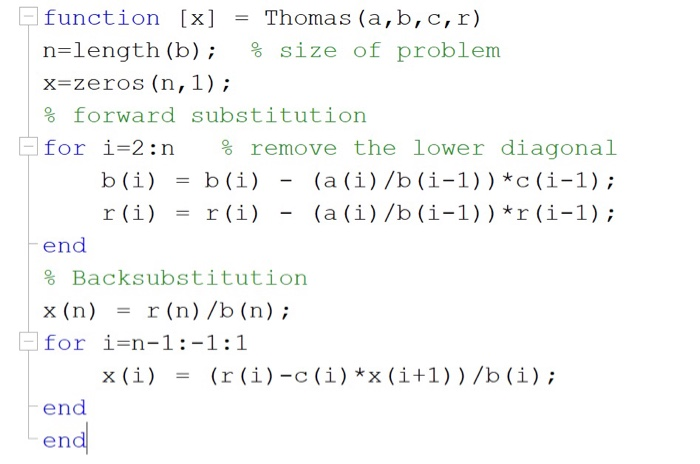

Trust-region size if it does not accept the trial step, when f( x + s) ≥į( x). The solver repeats these four steps until convergence, adjusting he trust-regionĭimension Δ according to standard rules. The solver defines S as the linear space spanned byĪn approximate Newton direction, that is, a solution toįormulate the two-dimensional trust-region subproblem. The solver determines the two-dimensional subspace S with theĪid of a preconditioned conjugate gradient method (described in the next section). In the subspace, the problem is only two-dimensional. Work to solve Equation 1 is trivial because, After the solver computes the subspace S, the Trust-region subproblem to a two-dimensional subspace S ( and ). Optimization Toolbox solvers follow an approximation approach that restricts the On Equation 1, have been proposed in the literature ( and ). Several approximation and heuristic strategies, based However, this requires time proportional to

Such an algorithm provides an accurate solution to Equation 1. To solve Equation 1, an algorithm (see ) can compute all eigenvalues of H and then apply a Newton Symmetric matrix of second derivatives), D is a diagonal scaling Where g is the gradient of f at the current Mathematically, the trust-region subproblem is typically stated min , The neighborhood N is usually spherical or ellipsoidal in shape. Terms of the Taylor approximation to F at x. In the standard trust-region method ( ), the quadratic approximation q is defined by the first two N, and how accurately to solve the trust-region

#F solve matlab how to#

X), how to choose and modify the trust region

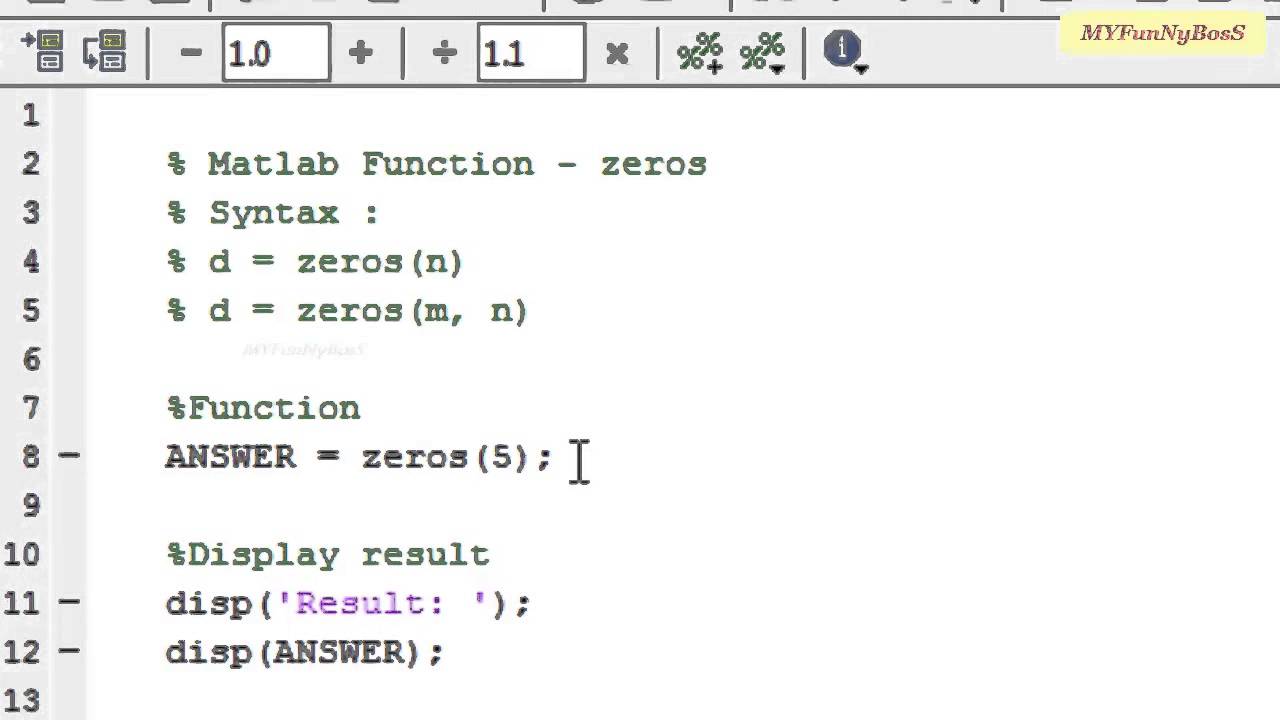

The key questions in defining a specific trust-region approach to minimizingĪpproximation q (defined at the current point Shrinks N (the trust region) and repeats the trial step X + s if f( x + s) < f( x) otherwise, the current point remains unchanged and the solver Minimizing (or approximately minimizing) over N. Reasonably reflects the behavior of function f in a neighborhood Moving to a point with a lower function value. Unconstrained minimization problem, minimizeĪrguments and returns scalars. To understand the trust-region approach to optimization, consider the Many of the methods used in Optimization Toolbox™ solvers are based on trust regions, a simple yet When in doubt, refer to matlab's own handy guide on this topic.All algorithms are large scale see Large-Scale vs. This finite difference routine is also pre-compiled. Which of the above seems like the more computationally efficient option?Īdditionally, if you can compute the gradient, is there any reason why are you using fminsearch instead of fminunc, which carries out gradient based optimization? fminunc will also compute your gradient and Hessian, via finite difference, if you cannot compute them analytically. Then you also use a built in, pre-compiled optimization algorithm to take an optimization step. So you skip on the computing the gradient, which saves time. This whole loop is also presumably not pre-compiled.įminsearch is function that carries out non gradient based optimization. All of this would take place within a for or, or more likely, a while loop that considers max iterations and/or convergence criteria. Presumably, you'd use a self-written, non compiled optimization algorithm for this. Then you'd need to take an optimization step. You'd need to find the gradient w/ respect to your variables. Read the thread below.įsolve is a function that evaluates another function. Edit: Leaving this comment up, even though it isn’t fully accurate.
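The four-step trust-region iteration described in this section can be illustrated with a small, self-contained sketch. This is not the Optimization Toolbox implementation; it is written in plain Python under several stated assumptions: the scaling matrix $D$ is the identity, the second subspace direction comes from plain (unpreconditioned) conjugate gradients, the two-dimensional subproblem is solved approximately by sampling the trust-region boundary and checking the interior stationary point (rather than by an eigenvalue and secular-equation solve), and $\Delta$ is doubled after an accepted step and quartered after a rejected one. All function names (`cg_direction`, `solve_reduced`, `trust_region_minimize`) are hypothetical.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def matvec(M, v):
    return [dot(row, v) for row in M]

def axpy(a, x, y):
    """Return a*x + y for lists x and y."""
    return [a * xi + yi for xi, yi in zip(x, y)]

def cg_direction(H, g, tol=1e-10):
    """Plain CG on H s = -g (assumption: no preconditioner).
    Returns an approximate Newton direction, or the current search
    direction as soon as it shows non-positive curvature."""
    s = [0.0] * len(g)
    r = [-gi for gi in g]              # residual of H s = -g at s = 0
    p = r[:]
    for _ in range(len(g)):
        Hp = matvec(H, p)
        curv = dot(p, Hp)
        if curv <= 0.0:
            return p                   # p^T H p <= 0
        rr = dot(r, r)
        alpha = rr / curv
        s = axpy(alpha, p, s)
        r = axpy(-alpha, Hp, r)
        rr_new = dot(r, r)
        if rr_new <= tol * tol:
            break
        p = axpy(rr_new / rr, p, r)
    return s

def solve_reduced(Hr, gr, delta, samples=720):
    """Approximately minimize 0.5 c^T Hr c + gr^T c over ||c|| <= delta
    for a 1-D or 2-D reduced problem (assumed sampling approach)."""
    q = lambda c: 0.5 * dot(c, matvec(Hr, c)) + dot(gr, c)
    if len(gr) == 1:
        cands = [[-delta], [delta]]
        if Hr[0][0] > 0.0 and abs(gr[0] / Hr[0][0]) <= delta:
            cands.append([-gr[0] / Hr[0][0]])
    else:
        cands = [[delta * math.cos(2 * math.pi * i / samples),
                  delta * math.sin(2 * math.pi * i / samples)]
                 for i in range(samples)]
        a, b, d = Hr[0][0], Hr[0][1], Hr[1][1]
        det = a * d - b * b
        if a > 0.0 and det > 0.0:      # positive definite: try interior point
            c = [(-d * gr[0] + b * gr[1]) / det, (b * gr[0] - a * gr[1]) / det]
            if math.hypot(c[0], c[1]) <= delta:
                cands.append(c)
    return min(cands, key=q)

def trust_region_minimize(f, grad, hess, x, delta=1.0, tol=1e-8, max_iter=200):
    for _ in range(max_iter):
        g = grad(x)
        gnorm = math.sqrt(dot(g, g))
        if gnorm < tol:
            break
        H = hess(x)
        # Step 1: build an orthonormal basis of span{g, s2}.
        s2 = cg_direction(H, g)
        v1 = [gi / gnorm for gi in g]
        w = axpy(-dot(s2, v1), v1, s2)
        wnorm = math.sqrt(dot(w, w))
        basis = [v1]
        if wnorm > 1e-10 * math.sqrt(dot(s2, s2)):
            basis.append([wi / wnorm for wi in w])
        Hr = [[dot(vi, matvec(H, vj)) for vj in basis] for vi in basis]
        gr = [dot(vi, g) for vi in basis]
        # Step 2: solve the reduced subproblem for the trial step s.
        c = solve_reduced(Hr, gr, delta)
        s = [sum(ci * vi[j] for ci, vi in zip(c, basis)) for j in range(len(x))]
        # Step 3: accept the step only if f decreases.
        x_trial = axpy(1.0, s, x)
        if f(x_trial) < f(x):
            x = x_trial
            delta *= 2.0               # Step 4 (assumed rule): expand
        else:
            delta *= 0.25              # Step 4: shrink after rejection
    return x

# Usage on a convex quadratic f(x) = 0.5 x^T A x - b^T x, whose exact
# minimizer solves A x = b, namely (1/11, 7/11, 3/2).
A = [[4.0, 1.0, 0.0], [1.0, 3.0, 0.0], [0.0, 0.0, 2.0]]
b = [1.0, 2.0, 3.0]
f = lambda x: 0.5 * dot(x, matvec(A, x)) - dot(b, x)
grad = lambda x: axpy(-1.0, b, matvec(A, x))
hess = lambda x: A
x_min = trust_region_minimize(f, grad, hess, [0.0, 0.0, 0.0])
```

Sampling the boundary keeps the sketch short, but it limits the accuracy of boundary steps to the angular grid. Fast final convergence here relies on the interior Newton point becoming feasible once $\Delta$ is large enough.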
